Fastest Way to Load Data Into PostgreSQL Using Python

From two minutes to less than half a second!

As glorified data plumbers, we are often tasked with loading data fetched from a remote source into our systems. If we are lucky, the data is serialized as JSON or YAML. When we are less fortunate, we get an Excel spreadsheet or a CSV file which is always broken in some way, can't explain it.

Data from large companies or old systems is somehow always encoded in a weird way, and the Sysadmins always think they do us a favour by zipping the files (please gzip) or breaking them into smaller files with random names.

Modern services might provide a decent API, but more often than not we need to fetch a file from an FTP, SFTP, S3 or some proprietary vault that works only on Windows.

In this article we explore the best way to import messy data from a remote source into PostgreSQL.

To provide a real life, workable solution, we set the following ground rules: the data is fetched from a remote source, and the data is dirty and needs to be transformed.

Benchmark of `insert_execute_batch_iterator` with different values of `page_size`:

| Call | Time | Memory |
| --- | --- | --- |
| `insert_execute_batch_iterator(connection, iter(beers), page_size=1)` | 130.2 | 0.0 |
| `insert_execute_batch_iterator(connection, iter(beers), page_size=100)` | 4.333 | 0.0 |
| `insert_execute_batch_iterator(connection, iter(beers), page_size=1000)` | 2.537 | 0.2265625 |
| `insert_execute_batch_iterator(connection, iter(beers), page_size=10000)` | 2.585 | 25.4453125 |

We got some interesting results, let's break it down:

1: The results are similar to the results we got inserting rows one by one.

100: This is the default page_size, so the results are similar to our previous benchmark.

1000: The timing here is about 40% faster than with the default page size (2.537 vs 4.333), and the memory is low.

10000: Timing is no better than with a page size of 1000 (2.585 vs 2.537), but the memory is significantly higher.

The results show that there is a tradeoff between memory and speed. In this case, it seems that the sweet spot is a page size of 1000.
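The body of `insert_execute_batch_iterator` is not shown in this excerpt, so here is a minimal sketch of what such a function might look like, assuming psycopg2 and a hypothetical `staging_beers (id, name)` table. The `paginate` helper is not psycopg2 code. It is only an illustration of what `page_size` means: rows are consumed from the iterator in groups of at most `page_size`, which is why larger pages hold more rows in memory at once.

```python
from itertools import islice
from typing import Any, Dict, Iterable, Iterator, List


def paginate(rows: Iterable[Dict[str, Any]], page_size: int) -> Iterator[List[Dict[str, Any]]]:
    """Yield lists of at most page_size rows (illustration only, not psycopg2 code)."""
    it = iter(rows)
    while True:
        page = list(islice(it, page_size))
        if not page:
            return
        yield page


def insert_execute_batch_iterator(connection, beers, page_size: int = 100) -> None:
    """Insert rows from an iterator of dicts, page_size rows per round trip.

    Assumptions: the table name and columns are hypothetical, and committing
    is left to the caller (or to connection.autocommit).
    """
    # Imported lazily so that paginate() can be tried without psycopg2 installed.
    import psycopg2.extras

    with connection.cursor() as cursor:
        psycopg2.extras.execute_batch(
            cursor,
            "INSERT INTO staging_beers (id, name) VALUES (%(id)s, %(name)s)",
            beers,  # any iterable of dicts, including a generator
            page_size=page_size,
        )
```

With five rows and a page size of 2, `paginate` yields pages of 2, 2 and 1 rows. A page size of 1 therefore degenerates into one statement per round trip, which is consistent with the slow `page_size=1` timing above.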

Is the server running on host "." (54.) and accepting

I do not have any kind of firewall enabled, and am able to connect to public PostgreSQL instances on other providers (e.g. Heroku).

Any troubleshooting tips would be much appreciated, since I'm pretty much at a loss here.

Transfer data from Python to AWS PostgreSQL

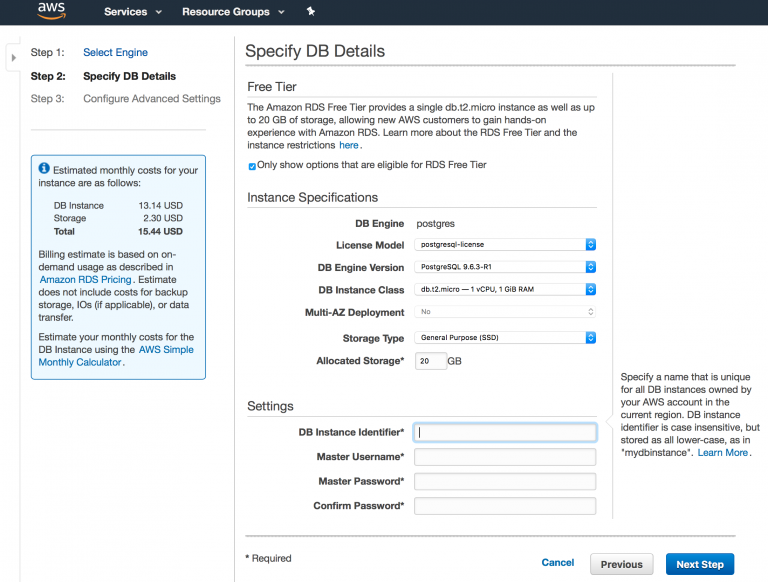

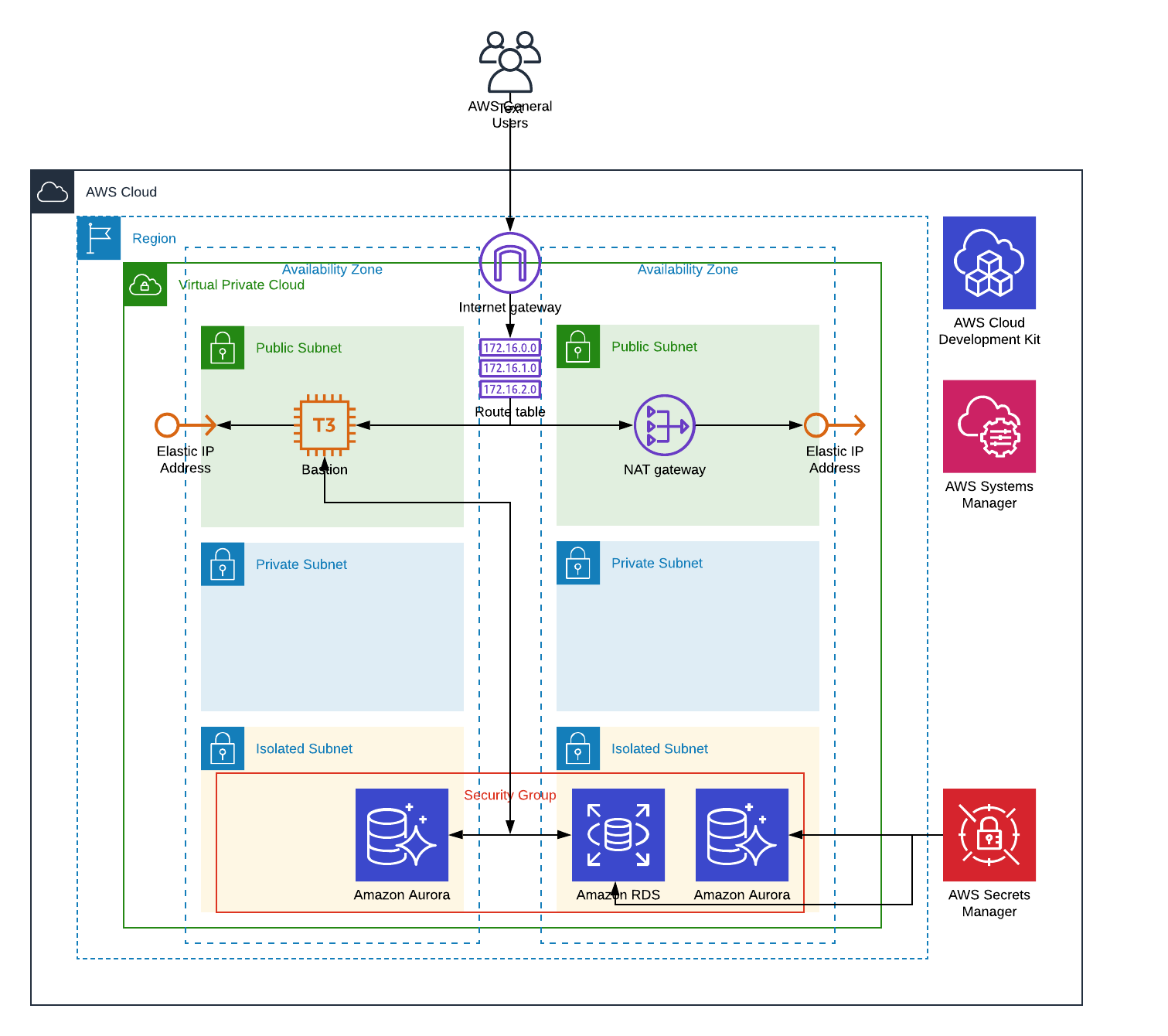

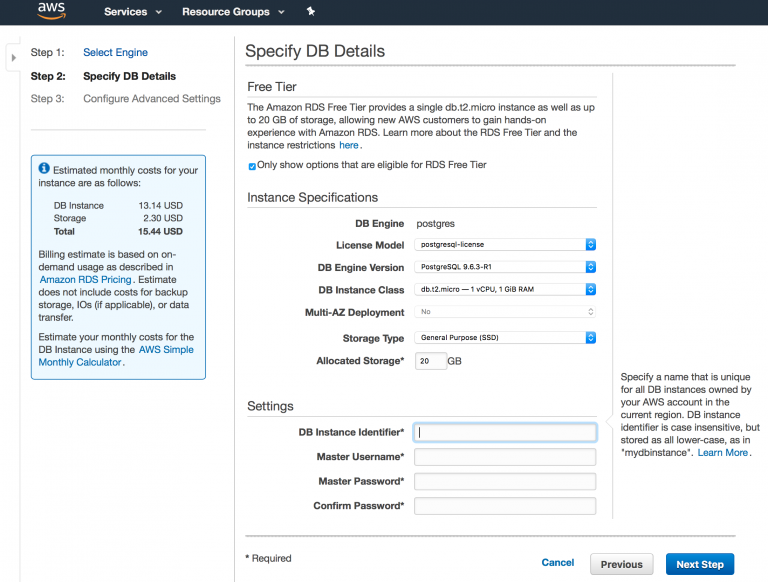

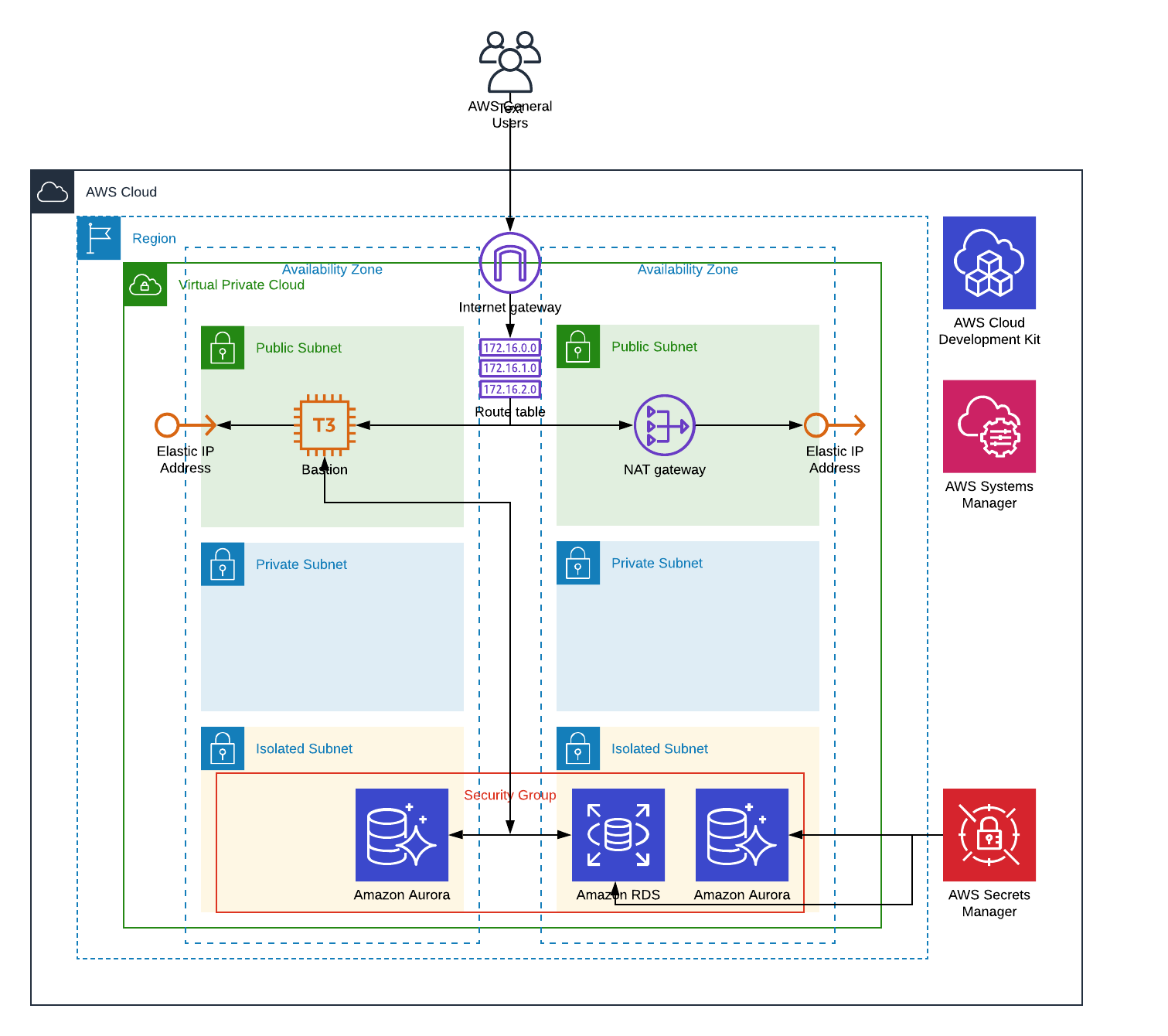

I created a basic test PostgreSQL RDS instance in a VPC that has a single public subnet and that should be available to connect over the public internet. It uses the default security group, which is open for port 5432. I must be missing something very straightforward - but I'm pretty lost on this.

Here're the database settings, note that it's marked as Publicly Accessible:

Here're the security group settings, note it's wide open (affirmed in the RDS settings above by the green "authorized" hint next to the endpoint):

Here's the command I'm trying to use to connect: `psql --host=. \`

And this is the result I'm getting when trying to connect from a Yosemite MacBook Pro (note, it's resolving to a 54.* IP address): `psql: could not connect to server: Operation timed out`
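One way to narrow down a timeout like this is to take `psql` out of the picture and test plain TCP reachability of the port. The sketch below uses only the Python standard library. The function name `can_reach` and the default timeout are my own choices, and the endpoint in the usage note is a placeholder, since the real host is elided above.

```python
import socket


def can_reach(host: str, port: int = 5432, timeout: float = 5.0) -> bool:
    """Return True if a TCP connection to host:port opens within timeout seconds."""
    try:
        # create_connection resolves the host name and performs the TCP handshake.
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers timeouts, refused connections and DNS resolution failures.
        return False
```

Calling `can_reach("<your-rds-endpoint>", 5432)` from the same machine would show whether the port is reachable at all. If it returns False while the same check against another provider succeeds, the problem is likely on the network path (security group, subnet routing, or similar) rather than in the `psql` command itself, though this check alone cannot tell which.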
